Virtuoso#

- User needs: Linked data hosting, querying solution

- User profiles: Business Users, Data Analysts, Data Scientists, Data Engineers

- User assumed knowledge: Linked data fundamentals

With Windows Virtual Desktops (WVDs) you can connect via a web client to your virtual desktop, a personal workspace in the cloud. The desktop is associated with your user and has access to the data science labs (DSLs) you have permission to access. All private resources in a DSL can be accessed from your WVD via their private IP addresses.

Onboarding#

This document describes how users interface with the Virtuoso building block, and as such is intended for users who have onboarded onto the EC Data Platform Linked Data offering. More on this can be found on the dedicated page.

Uploading triples#

Prerequisites#

In order to make your linked data available for querying, you will need to provide an export of the graph(s) you would like us to host in one of the supported formats. These formats are the following:

- Geospatial RDF (.grdf)

- N-Quads (.nq)

- N-Triples (.nt)

- OWL (.owl)

- RDF/XML (.rdf)

- TriG (.trig)

- Turtle (.ttl)

- RDF/XML (.xml)

These can be provided in a gzip compressed format (marked by the additional .gz extension).
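Before uploading, it can help to check that a file name carries one of the extensions listed above. The following is a minimal sketch of such a check; the helper name `is_supported_upload` is hypothetical and not part of any platform tooling, and the check is deliberately case-sensitive.

```python
# Extensions taken from the list of supported formats above.
SUPPORTED_EXTENSIONS = (".grdf", ".nq", ".nt", ".owl", ".rdf", ".trig", ".ttl", ".xml")


def is_supported_upload(filename):
    """Return True if filename ends in a supported extension, optionally followed by .gz."""
    name = filename
    if name.endswith(".gz"):
        # Strip the compression suffix before checking the RDF extension.
        name = name[: -len(".gz")]
    return name.endswith(SUPPORTED_EXTENSIONS)


for candidate in ("graph.nq.gz", "graph.ttl", "graph.csv"):
    print(candidate, is_supported_upload(candidate))
```

A check like this only inspects the name; it does not verify that the file contents are valid for the format.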

As the upload and log inspection require invoking an AWS API, you'll need a working installation of the AWS CLI. Installation instructions are available in the official AWS documentation.

You will also need AWS credentials to perform the upload. The process for obtaining credentials for programmatic upload of your linked data is described on this page.

To configure the AWS CLI to make its calls using the provided credentials, run the aws configure command.

Lastly, you should have received the name of the bucket to upload your files to during the onboarding process. If you have not, please reach out to the support team.

Uploading your file to S3 using the AWS CLI#

Once you have obtained your AWS credentials, upload your file with the following command:

aws s3 cp <yourfile.nq.gz> s3://<bucket-name>

Substitute <yourfile.nq.gz> with the file you are looking to upload from your local machine, and <bucket-name> with the name of the bucket provided to you during the onboarding process.

Uploading your file to S3 using Amazon's SDK (Boto3)#

The following snippet will upload a local file to S3 using Python. It can give you some ideas as to the general process. Please note the AWS SDK is available for most major programming languages.

Refer to official AWS documentation here: https://aws.amazon.com/tools/

import boto3
from botocore.exceptions import NoCredentialsError

# Enter your obtained credential keys below.
ACCESS_KEY = 'XXXXXXXXXXXXXXXXXXXXXXX'
SECRET_KEY = 'XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX'


def upload_to_aws(local_file, bucket, s3_file):
    # Create an S3 client that authenticates with the keys above.
    s3 = boto3.client('s3', aws_access_key_id=ACCESS_KEY,
                      aws_secret_access_key=SECRET_KEY)
    try:
        s3.upload_file(local_file, bucket, s3_file)
        print("Upload Successful")
        return True
    except FileNotFoundError:
        print("The file was not found")
        return False
    except NoCredentialsError:
        print("Credentials not available")
        return False


uploaded = upload_to_aws('local_file.nq', 'bucket_name', 's3_file_name.nq')

Substitute the required variables in the above script:

- Enter your access key and secret key.

- In the final line (the call whose result is assigned to uploaded), set the name of your local file (e.g. 'local_file.nq'), the name of the S3 bucket you are uploading to (e.g. 'cordis-test-virtuoso-graph-ingest'), and the name you want the file to have in the S3 bucket (e.g. 'example_filename.nq'). Make sure that the file name on S3 has an extension (usually .nq) that matches the file format.

Note that you are free to use any graph name you require. However, you can add at most one file per target graph.

For production use, we strongly advise loading your access key and secret key from an appropriate secret store at run time rather than hardcoding them.
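One common way to keep keys out of the source code is to read them from environment variables, which a secret store or deployment system can populate at run time. The sketch below assumes the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` variable names; the helper names are hypothetical, and this is an illustration rather than a prescribed setup.

```python
import os


def client_kwargs_from_env(env=None):
    """Build boto3.client keyword arguments from environment variables.

    Returns an empty dict when the variables are missing, in which case
    boto3 falls back to its default credential lookup (for example the
    profile written by `aws configure`).
    """
    env = os.environ if env is None else env
    access_key = env.get("AWS_ACCESS_KEY_ID")
    secret_key = env.get("AWS_SECRET_ACCESS_KEY")
    if access_key and secret_key:
        return {
            "aws_access_key_id": access_key,
            "aws_secret_access_key": secret_key,
        }
    return {}


def upload_without_hardcoded_keys(local_file, bucket, s3_file, env=None):
    """Upload a file using credentials resolved at run time."""
    import boto3  # imported here so the helper above works without boto3 installed

    s3 = boto3.client("s3", **client_kwargs_from_env(env))
    s3.upload_file(local_file, bucket, s3_file)
```

Because boto3 reads these environment variables on its own as well, calling `boto3.client("s3")` with no key arguments is often sufficient.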

More information on uploading a file to Amazon S3 using Python can be found here.

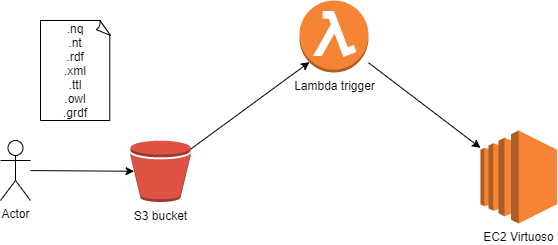

The architecture#

Once the upload is finished, a Lambda function is triggered, which will instruct the Virtuoso server to download the file onto its local storage, and begin loading it into the database. This entire process is automated.

The process can take several hours for very large uploads (more than 100 million triples). You can query the SPARQL endpoint as described below to view the progress of the ingestion.

In the case of very large loads, please let the technical team know in advance so they can monitor the process.
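The platform's actual Lambda function is not shown in this document. Purely as an illustration of the trigger mechanism, the sketch below shows how a function could read the bucket name and object key from a standard S3 event notification. The function name and the sample values are hypothetical.

```python
from urllib.parse import unquote_plus


def extract_uploaded_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    uploaded = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(s3_info["object"]["key"])
        uploaded.append((bucket, key))
    return uploaded


# Hypothetical event, trimmed to the fields used above.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "my+graph.nq.gz"}}}
    ]
}
print(extract_uploaded_objects(sample_event))
```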

Inspecting log files using the AWS CLI#

The ingestion pipeline writes the loader's output to log streams that can be inspected. The same credentials you used to upload your graph files to S3 can be used to query these log streams.

The syntax for querying these streams is as follows:

aws logs describe-log-streams --log-group-name /virtuoso/<YOUR_SUFFIX_CODE>

aws logs get-log-events --log-group-name /virtuoso/<YOUR_SUFFIX_CODE> --log-stream-name <YOUR_LOG_STREAM_NAME>

The suffix code needed to complete the query will be provided to you during the onboarding process.

The log stream name can be acquired from the output of the describe-log-streams command.

Inspecting log files using the AWS SDK (Boto3)#

In addition to the AWS CLI, log streams can be inspected using the AWS SDK.

The following snippets will read requested log files using Python. They can give you some ideas as to the general process. Please note the AWS SDK is available for most major programming languages.

Refer to the official AWS documentation or to Boto3 Documentation for more information.

Note that log files are stored in a "log group" which includes multiple "log streams". A log stream is a sequence of log events that share the same source.

First, the log streams in a log group can be read:

import boto3  # Boto3 is the AWS SDK for Python

# Connect to AWS CloudWatch Logs, the service where the logs are stored.
client = boto3.client('logs', region_name='eu-west-1')

stream_response = client.describe_log_streams(
    logGroupName='/virtuoso/<YOUR_SUFFIX_CODE>',  # Enter the suffix code received during the onboarding process
    orderBy='LastEventTime',  # Order the streams by the time of their latest event
    descending=True,  # Most recent first
    limit=30  # Maximum number of log streams to return
)

# The requested log streams are printed to your screen.
print(stream_response)
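The response of `describe_log_streams` is a dictionary whose `logStreams` entry holds one item per stream. As a small sketch, the hypothetical helper below pulls out just the stream names so they can be passed to a follow-up call; the sample response is invented and trimmed to the single field used here.

```python
def latest_stream_names(stream_response, count=5):
    """Return up to `count` log stream names, in the order the API returned them."""
    streams = stream_response.get("logStreams", [])
    return [stream["logStreamName"] for stream in streams[:count]]


# Hypothetical response, trimmed to the field used above.
sample_response = {
    "logStreams": [
        {"logStreamName": "stream-b"},
        {"logStreamName": "stream-a"},
    ]
}
print(latest_stream_names(sample_response, count=1))
```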

Next, to read the log events in one of these log streams:

import boto3  # Boto3 is the AWS SDK for Python

# Connect to AWS CloudWatch Logs, the service where the logs are stored.
client = boto3.client('logs', region_name='eu-west-1')

response = client.get_log_events(
    logGroupName='/virtuoso/<YOUR_SUFFIX_CODE>',  # Enter the suffix code received during the onboarding process
    logStreamName='logStreamName',  # Enter the name of a log stream, acquired from the output of the previous script
    limit=100,  # Maximum number of log events to return
)

log_events = response['events']  # Store the returned log events for output

# Print each log event in the log stream to your screen.
for each_event in log_events:
    print(each_event)
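Each event returned by `get_log_events` is a dictionary with, among other fields, a `timestamp` in milliseconds since the Unix epoch and a `message`. Printing the raw dictionaries can be hard to read, so the sketch below formats one event as a single line. The helper name and the sample event are hypothetical.

```python
from datetime import datetime, timezone


def format_log_event(event):
    """Render one CloudWatch log event as '<UTC timestamp> <message>'."""
    # CloudWatch timestamps are expressed in milliseconds since the epoch.
    moment = datetime.fromtimestamp(event["timestamp"] / 1000, tz=timezone.utc)
    return f"{moment.isoformat()} {event['message'].rstrip()}"


# Hypothetical event, trimmed to the fields used above.
sample_log_event = {"timestamp": 0, "message": "loader started\n"}
print(format_log_event(sample_log_event))
```

In the loop above, `print(format_log_event(each_event))` could replace `print(each_event)`.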

Querying#

The Data Platform offers a publicly available SPARQL endpoint for querying your data. At the time of writing, this endpoint is https://linked.ec-dataplatform.eu/sparql for the production cluster and https://test-linked.ec-dataplatform.eu/sparql for the standalone test instance.

An introduction to the SPARQL query language is out of the scope of this user documentation. Here are a few very basic queries to get you started:

Count triples in a given graph#

SELECT (COUNT(*) AS ?count)
FROM <NAME_OF_GRAPH>
WHERE { ?s ?p ?o }

List all graphs on the instance ordered by size#

SELECT ?graph (count(?s) as ?count)
WHERE { GRAPH ?graph { ?s ?p ?o } }
GROUP BY ?graph
ORDER BY DESC(?count)
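Queries like the ones above can also be sent from a script. The following is a minimal sketch using only the Python standard library, assuming the endpoint accepts queries via HTTP GET with a `query` parameter and returns JSON when asked through the `Accept` header, as described by the SPARQL 1.1 Protocol. The function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

ENDPOINT = "https://linked.ec-dataplatform.eu/sparql"  # production endpoint named above


def build_request(query, endpoint=ENDPOINT):
    """Build a GET request that asks for SPARQL results in JSON."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+json"}
    )


def run_query(query, endpoint=ENDPOINT):
    """Send the query and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(query, endpoint), timeout=30) as response:
        return json.load(response)


def bindings_to_rows(results):
    """Flatten SPARQL JSON results into a list of {variable: value} dicts."""
    return [
        {name: cell["value"] for name, cell in binding.items()}
        for binding in results["results"]["bindings"]
    ]
```

For example, `bindings_to_rows(run_query("SELECT (COUNT(*) AS ?count) FROM <NAME_OF_GRAPH> WHERE { ?s ?p ?o }"))` would return the triple count as a one-row list once `<NAME_OF_GRAPH>` is replaced with your graph name. Running it periodically is one way to follow the progress of an ingestion.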