Apache Airflow

Apache Airflow lets you programmatically author and schedule workflows and monitor them through a built-in user interface. Airflow is written in Python and follows the principle of "configuration as code": workflows are defined in Python files rather than in separate configuration formats.

Airflow consists of multiple building blocks, such as:

- A DAG (Directed Acyclic Graph), which represents a workflow and contains individual pieces of work called tasks, arranged according to their dependencies and data flows

- A scheduler, which triggers scheduled workflows and submits tasks to the executor to run

- An executor, which handles running tasks

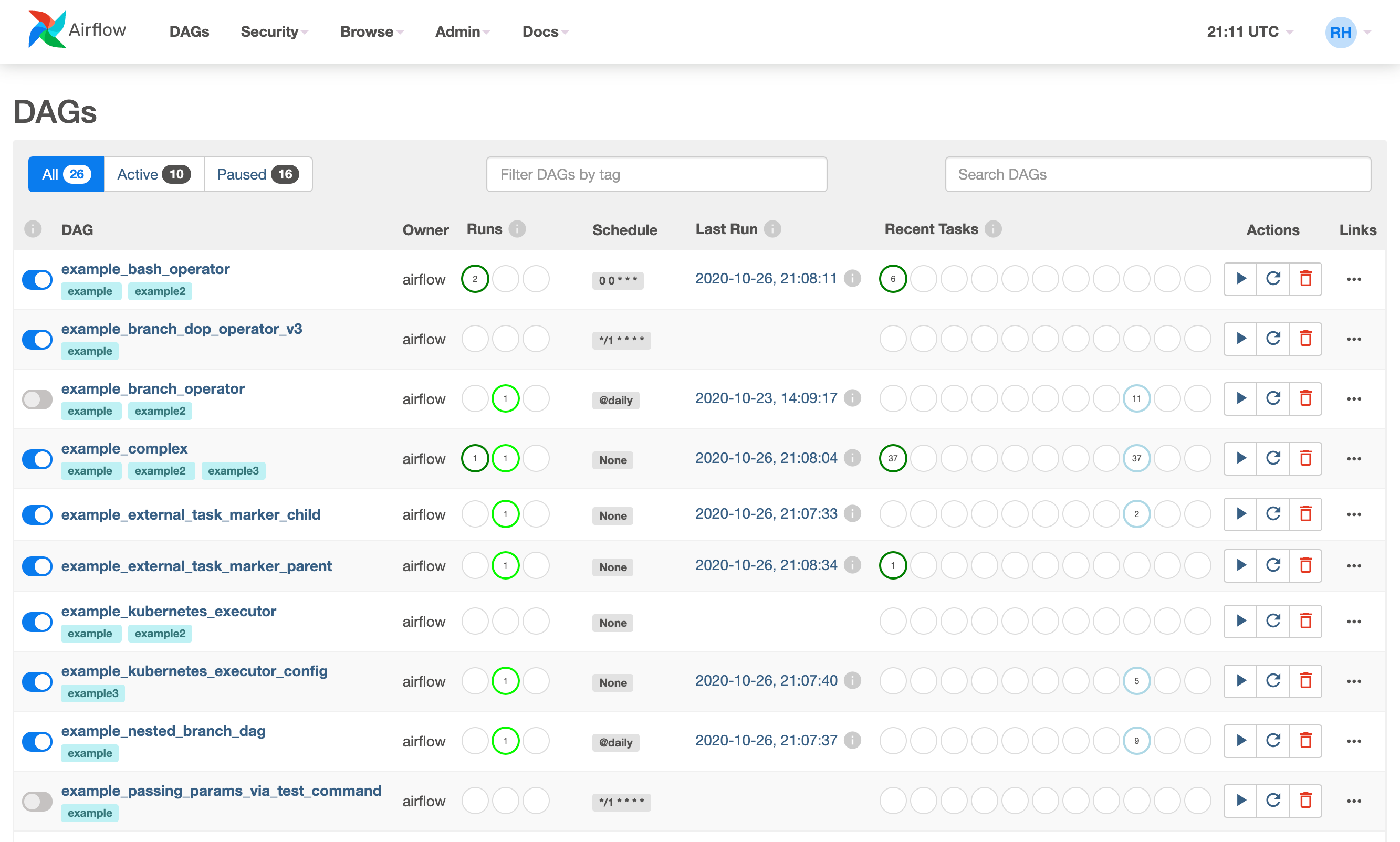

- A webserver, which presents a handy user interface to inspect, trigger and debug the behaviour of DAGs and tasks

- A folder of DAG files, read by the scheduler and executor

- A metadata database, used by the scheduler, executor and webserver to store state

- A built-in Airflow user interface (multiple Airflow UI visualizations exist)

Please find the different Airflow UI visualizations here.
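How the DAG, scheduler, executor, and metadata database relate to each other can be illustrated with a small conceptual sketch. It uses only the Python standard library and is not Airflow's actual API: the task functions, the `schedule` and `execute` helpers, and the `state` dictionary are hypothetical stand-ins for the corresponding building blocks.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


# Hypothetical task bodies; in a real workflow these would do actual work.
def extract():
    return "extracted"


def transform():
    return "transformed"


def load():
    return "loaded"


# The "DAG": each task name maps to the set of tasks it depends on.
dag = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}
tasks = {"extract": extract, "transform": transform, "load": load}


def schedule(dag):
    """Stand-in for the scheduler: order tasks so dependencies run first."""
    return list(TopologicalSorter(dag).static_order())


def execute(order, tasks, state):
    """Stand-in for the executor: run each task and record its state."""
    for name in order:
        tasks[name]()
        state[name] = "success"
    return state


# A plain dict stands in for the metadata database.
state = execute(schedule(dag), tasks, {})
print(state)
```

Because the graph is acyclic, a valid run order always exists. A real Airflow deployment adds what this sketch leaves out, such as time-based triggering, retries, and persistent state.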

Features

Find below some of the main features of Apache Airflow:

- A useful UI: makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed

- Robust integrations: Apache Airflow provides many plug-and-play operators that are ready to execute your tasks on third-party services such as Apache Spark, MongoDB, and many more

- Easy to use: easily deploy Python-based workflows to build ML models, transfer data, manage infrastructure, and so on

Use Cases

Find below some examples of possible use cases:

- Building an orchestration engine (scheduling and executing various types of workflows)

- Orchestrating SQL transformations in databases

- Orchestrating functions against clusters for ML models

- Increasing the visibility of batch processes and decoupling batch jobs
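As an illustration of the SQL transformation use case, the following minimal sketch runs a few SQL steps in dependency order against an in-memory SQLite database. It uses only the Python standard library rather than Airflow itself, and the table names, step names, and the `run_transformations` helper are assumptions made for the example.

```python
import sqlite3
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical transformation steps: name -> (SQL statement, upstream step names).
steps = {
    "create_raw": (
        "CREATE TABLE raw_orders (id INTEGER, amount REAL)",
        set(),
    ),
    "load_raw": (
        "INSERT INTO raw_orders VALUES (1, 10.0), (2, 32.5)",
        {"create_raw"},
    ),
    "build_totals": (
        "CREATE TABLE order_totals AS SELECT SUM(amount) AS total FROM raw_orders",
        {"load_raw"},
    ),
}


def run_transformations(conn, steps):
    """Run each SQL step only after the steps it depends on have finished."""
    graph = {name: deps for name, (_, deps) in steps.items()}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        conn.execute(steps[name][0])
    conn.commit()
    return order


conn = sqlite3.connect(":memory:")
order = run_transformations(conn, steps)
total = conn.execute("SELECT total FROM order_totals").fetchone()[0]
print(order, total)
```

In an Airflow deployment, each step would typically be its own task, so that failures, retries, and run history are visible per step in the UI.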

Resources

Find below some interesting links providing more information on Apache Airflow: